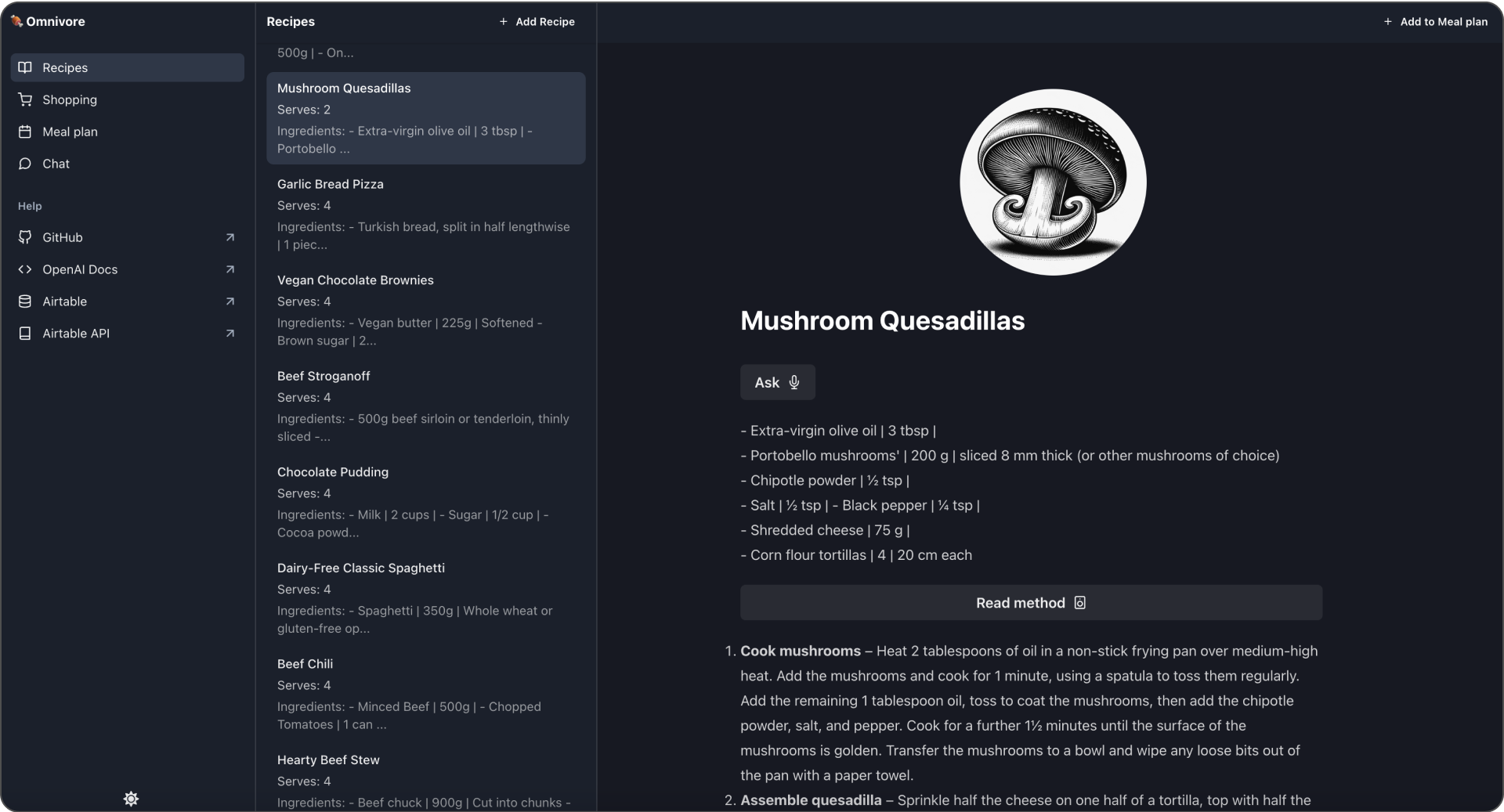

🍐 Omnivore

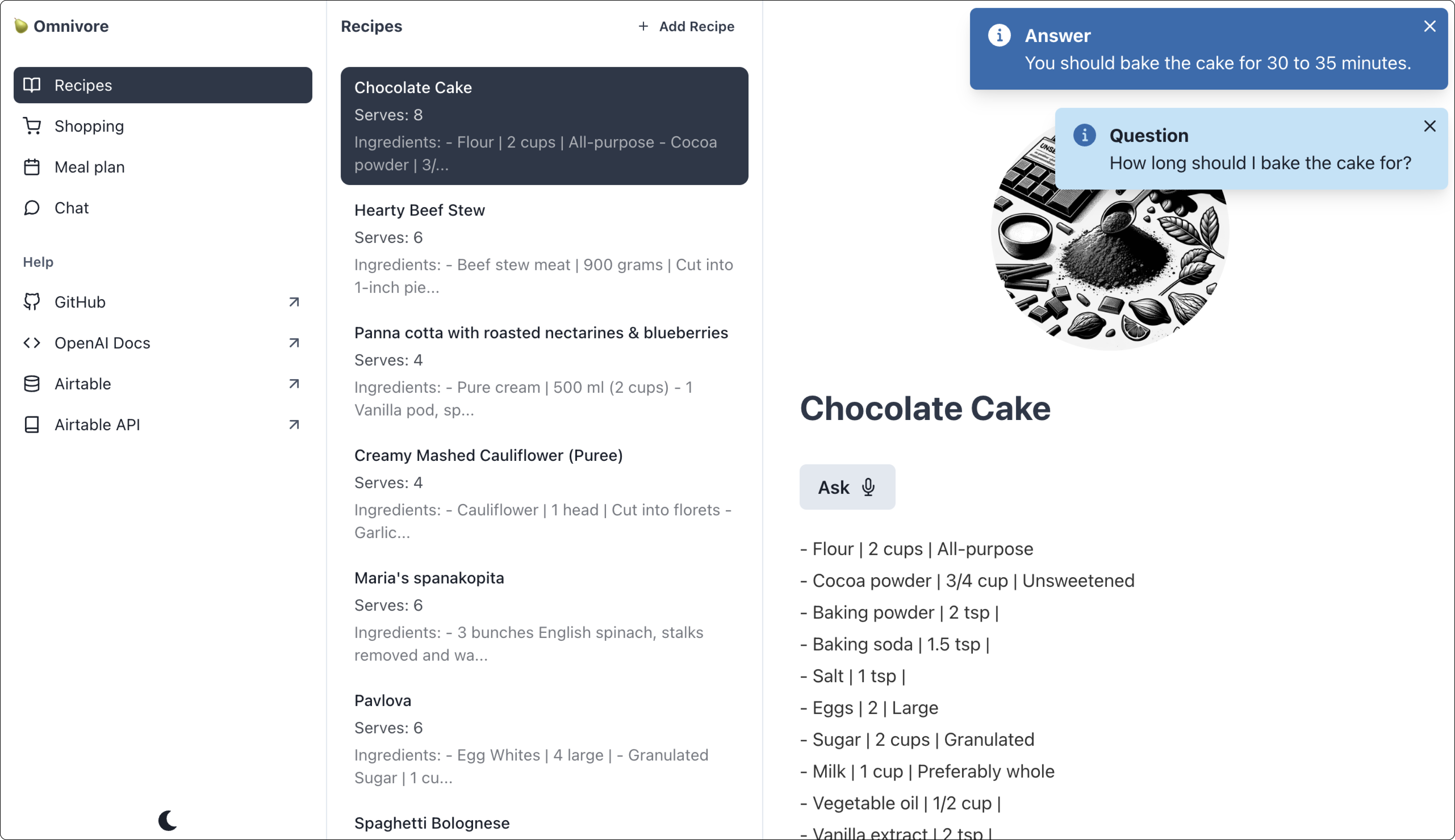

Omni listening to a question and responding with text to speech.

What is it?

Omnivore is an open source meal planning app I'm building to learn what an AI app can be.

I'm building with OpenAI's platform and its latest model, GPT-4o. Omnivore, with remarkably little code, can do the following things today:

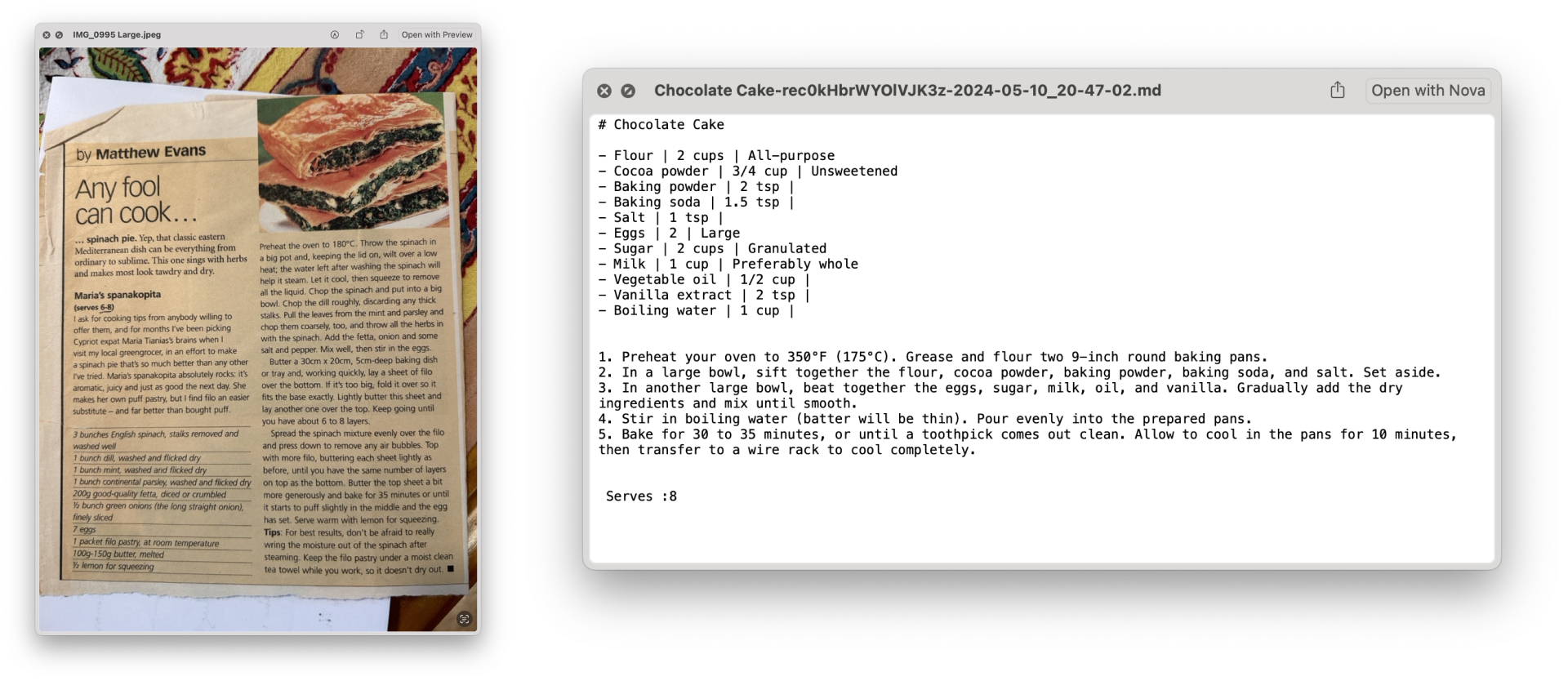

- Import a Recipe from a Photo: Uses GPT-4o Vision capabilities to import your favorite dishes.

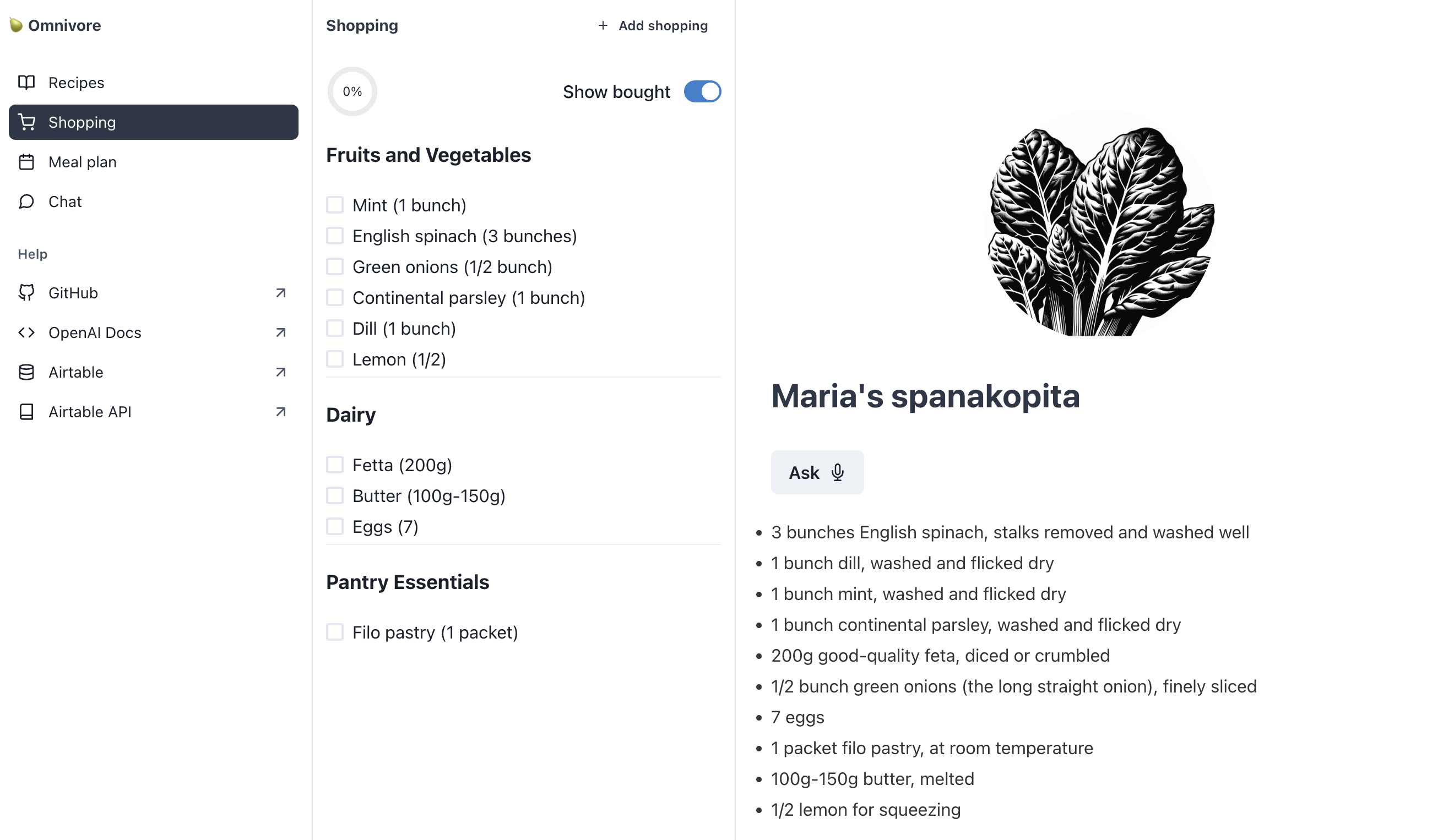

- Create a Smart Shopping List and Meal Plan: Leverage GPT-4o JSON mode for organized and efficient meal planning.

- Voice Interaction: Hear and respond to spoken questions using GPT-4o Chat completion, Whisper, and Text to Speech.

- Recipe Suggestions: Get personalized recipe suggestions from your collection using the GPT-4o Assistant API and File search.

- Generate Recipe Illustrations: Created with DALL-E following a requested style for uniformity.

Whether Omnivore can do all these things quickly, accurately, consistently and cheaply enough is an open question. Perhaps it's the big question about AI. So let's see if Omnivore can make it more digestible.

Why?

Well, anybody who knows me would agree that I've been totally insufferable since GPT-4 came out, turning every topic and conversation towards AI. On some level I hope this experience grounds my expectations and reduces the feeling of vertigo that the torrent of AI news, papers, releases and brand names brings. I like having this project to focus on.

My experience with AI so far has been the amazing ChatGPT and the occasional glimpse of an AI feature ✨ sprinkled into an existing product. I haven't seen many apps built from the ground up for AI that aren't just clones of ChatGPT. The recent demos of GPT-4o have me convinced that there's plenty to discover and rethink.

There are plenty of resources from OpenAI and others to get you building a feature from a specific part of the API. I've found very few examples of a whole app, with audio, vision and text understanding, that you can pick apart as a user experience and not just as a bunch of tech demos.

I chose meal planning as a user experience because I previously developed a meal planning app called Oma to learn React 8(!!) years ago. I thought it would be interesting to contrast the functionality and implementation of the two apps.

I also enjoy cooking and need to meal plan. Testing the app allows me to see how well the AI services work over time. Or not work! I kind of want to have some skin in the game. I want to mess up a recipe because I overestimated Omni's ability to convert units. That feels fair!

How Does It Work?

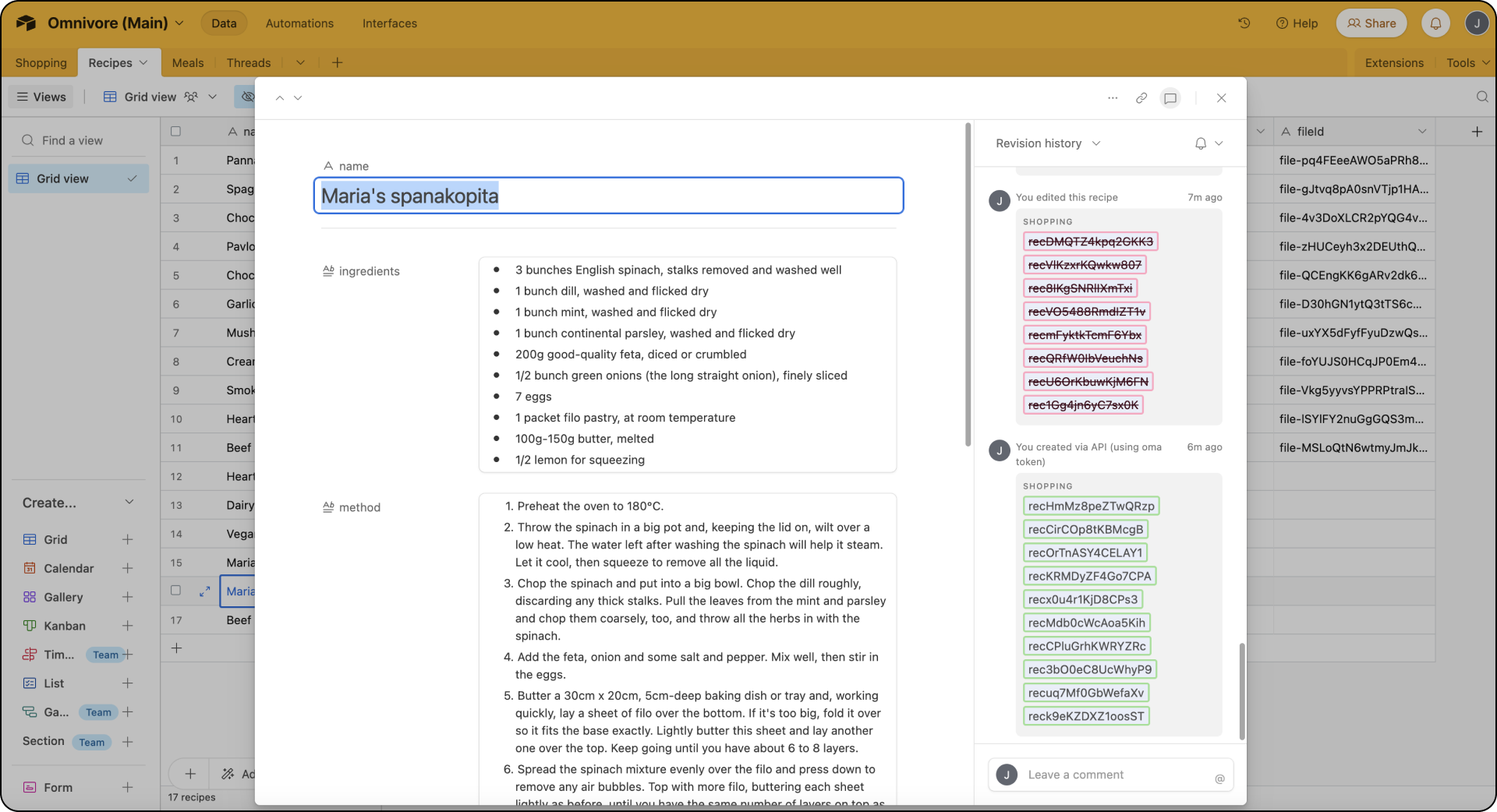

Airtable makes a great database for Omnivore.

Bring your own data

When you set up Omnivore, you need to set up an Airtable base, which provides both the database and an API. I did this to reduce the complexity of getting it up and running. Happily, I think the feeling of data ownership this step provides is important. I think the user's understanding of what data Omni can and can't see is important in AI apps. The separation of concerns may prove critical for users consenting to interact with AI.

Thanks to Airtable, you can see the changes to the records, which is helpful when debugging a prompt. You can also add comments that could be used for a future prompt improvement workflow.
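As a rough sketch of what saving an imported recipe to your own base could look like, the snippet below builds a create-record request for Airtable's REST API without sending it. The `Recipe` shape mirrors the fields named in the import prompt in this post; the base ID, table name and token are placeholders, and `buildCreateRecipeRequest` is a hypothetical helper for illustration, not necessarily how Omnivore talks to Airtable.

```typescript
// Recipe fields as named in the import prompt (name, ingredients, method, serves).
interface Recipe {
  name: string;
  ingredients: string; // markdown list
  method: string; // markdown numbered steps
  serves: number;
}

interface AirtableRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper: builds (but does not send) a create-record request.
// baseId, tableName and token come from your own Airtable account.
function buildCreateRecipeRequest(
  baseId: string,
  tableName: string,
  token: string,
  recipe: Recipe
): AirtableRequest {
  return {
    url: `https://api.airtable.com/v0/${baseId}/${encodeURIComponent(tableName)}`,
    method: "POST",
    headers: {
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json",
    },
    // Airtable expects column values under a "fields" key.
    // Assumption: the table's column names match the Recipe keys.
    body: JSON.stringify({ fields: recipe }),
  };
}
```

Keeping the request as plain data makes it easy to log next to the Airtable record when you are debugging a prompt.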

The newspaper clipping can be easily uploaded to Omni. Omni also outputs Markdown versions of your recipes for File search.

The imported recipe and the shopping list with smart categories.

The ultimate declarative functions

Many of the underlying functions in the app are just chat completion prompts. I describe the inputs, the outputs, and Omni predicts the response and provides it in a JSON format, which gets saved to Airtable.

Here's an example of the prompt for making a recipe:

You are a helpful assistant. Analyse the contents of the image of a cookbook page and

describe the text found within it in structured JSON format.

Remember the fields are name, ingredients, method and serves.

{ "name": "Chocolate Brownies",

"ingredients": "- Butter | 2 sticks| Softened - Brown Sugar | 1 cup - Sugar | 1/2 cup white ",

"method": "1. Mix together dry ingredients with wet ingredients slowly. Usually I add 2 cups....",

"serves": 4

}

Name, not Title or Recipe title. It must be name. serves is a number, not a string .e.g 4 not "4"

Can you always use metric measurements.

Note how the fields ingredients and method use markdown.

These prompt functions are the ultimate declarative programming, if you're generous about how much they cost, how long they take, and whether they follow the requirements. Omnivore is a fun way to play around, in the kitchen no less, where you can weigh up these obvious trade-offs. For me, this is worth exploring because I love the idea that over time users can craft their perfect prompt, like malleable software. For example, they may add a line that says to always double the amount of salt required by the recipe, or that they don't have a fan-forced oven, augmenting how this function works.
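Two pieces of this idea can be sketched in a few lines: appending user-written rules to the base prompt, and checking that the model's reply really has the requested fields before it is saved. This is a minimal illustration under assumptions: `composePrompt` and `parseRecipe` are hypothetical names, and the checks only cover the four fields the prompt asks for.

```typescript
interface Recipe {
  name: string;
  ingredients: string;
  method: string;
  serves: number;
}

// Append the user's own rules (e.g. "Always double the salt") to the base prompt.
function composePrompt(basePrompt: string, userRules: string[]): string {
  const rules = userRules.map((r) => r.trim()).filter((r) => r.length > 0);
  return rules.length === 0 ? basePrompt : `${basePrompt}\n\n${rules.join("\n")}`;
}

// Parse the model's JSON reply and enforce the requirements stated in the prompt:
// the key must be "name", and "serves" must be a number, not a string.
function parseRecipe(raw: string): Recipe {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null) {
    throw new Error("Expected a JSON object");
  }
  const { name, ingredients, method, serves } = data as Record<string, unknown>;
  if (typeof name !== "string") throw new Error('Missing string field "name"');
  if (typeof ingredients !== "string") throw new Error('Missing string field "ingredients"');
  if (typeof method !== "string") throw new Error('Missing string field "method"');
  if (typeof serves !== "number") throw new Error('"serves" must be a number');
  return { name, ingredients, method, serves };
}
```

A failed check is a useful signal in its own right: it tells you the prompt, not the code, needs another line.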

At the very least, with prompts you can see how we can prototype non-trivial features and evaluate if they’re worth building as an algorithm. That’s an amazing proposition to me, almost like an instant prototype.

Mushroom mode

GPT and Me

I selected TypeScript, React, and Chakra UI for the user interface. While these are tools I know well, the workflow was different this time, with every first pass of a component or layout starting out as a thread in ChatGPT. I'd start the convo with snippets of code from the repo or external docs to help it reason.

We’re going to create a node script that does the following

- Get a list of recipes from Airtable

- Saves them into markdown files on our computer

- Uploads them as files to open ai

- Attach the files to the assistant

Here’s some code to get you started

const vectorStoreId = await getOrCreateVectorStore(); // we will build this next

// upload using the file stream

const openaiFile = await openai.files.create({

file: file,

purpose: "assistants",

});

// add file to vector store

await openai.beta.vectorStores.files.create(vectorStoreId, {

file_id: openaiFile.id,

});
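The second step of that script, turning a recipe into a markdown file, could look something like the sketch below. It is an illustration only: `slugify` and `recipeToMarkdown` are hypothetical helpers, the heading layout is an assumption, and writing the file to disk and uploading it are left out.

```typescript
interface Recipe {
  name: string;
  ingredients: string; // already markdown, per the import prompt
  method: string; // already markdown, per the import prompt
  serves: number;
}

// Turn a recipe name into a safe, lowercase file name stem.
function slugify(name: string): string {
  return name
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // collapse anything non-alphanumeric into a dash
    .replace(/^-+|-+$/g, ""); // trim leading and trailing dashes
}

// Produce the file name and markdown body for one recipe.
function recipeToMarkdown(recipe: Recipe): { filename: string; content: string } {
  const content = [
    `# ${recipe.name}`,
    "",
    `Serves: ${recipe.serves}`,
    "",
    "## Ingredients",
    "",
    recipe.ingredients.trim(),
    "",
    "## Method",
    "",
    recipe.method.trim(),
    "",
  ].join("\n");
  return { filename: `${slugify(recipe.name)}.md`, content };
}
```

One file per recipe keeps each search hit traceable to a single dish in the collection.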

Usually with a side project, you get burnt out solving bugs, refactoring code, grinding between docs and old GitHub issues. Pairing with ChatGPT is a huge improvement, and the project has been a total pleasure; I get a good sense of flow. I love finding and combining code snippets to make a clear prompt.

At first I worried that by relying so heavily on ChatGPT I'd end up with code that's hard to maintain or reason about. That hasn't been the case, which maybe speaks to my underlying experience and the quality of the code output, with comments. I think just forcing myself to sit down and write the requirements so they're clear prompts would improve my own code, as it's often so tempting to just hop into the editor and start hacking away.

I’m looking forward to evolving the UI. As I bring in more features, I'm getting inspired on how to rework things to keep the context of the user's session linked, both in and out of the chat.

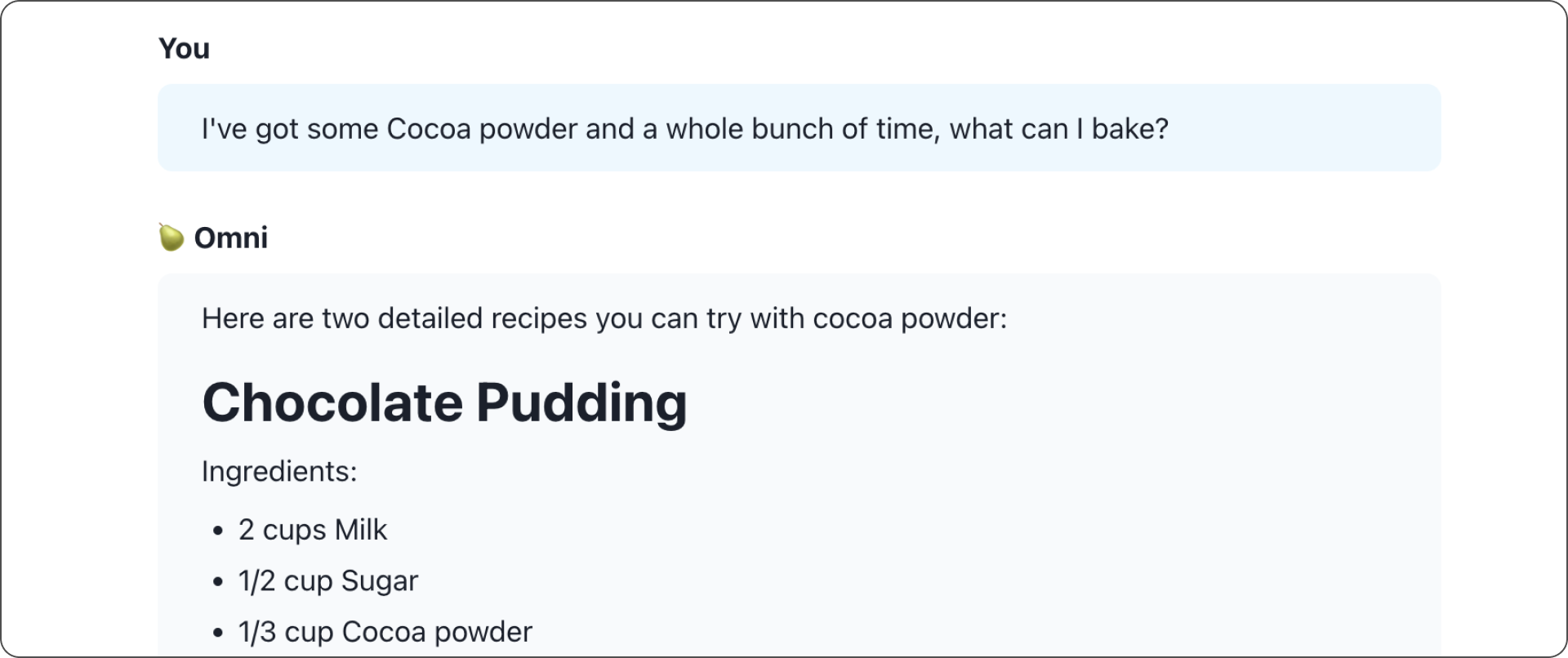

Omni, your recipe assistant.

Assistant

Omni is the assistant in the app, accessed via the Chat tab. Presently, we can upload the recipes to Omni as Files, so the suggestions are based on your collection and annotated accordingly. Omni also has function calling enabled and can add its own favorite recipes from its training.
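To make the function calling part concrete, here is a minimal sketch of a tool definition in the shape OpenAI's function tools use, plus a handler that applies a call to an in-memory collection. The function name `add_recipe`, the simplified `ToolCall` type and `handleToolCall` are all assumptions for illustration; Omnivore's actual tool names and storage may differ.

```typescript
interface Recipe {
  name: string;
  ingredients: string;
  method: string;
  serves: number;
}

// Tool definition: a JSON Schema describing the arguments the model should supply.
// "add_recipe" is a hypothetical name.
const addRecipeTool = {
  type: "function",
  function: {
    name: "add_recipe",
    description: "Add a new recipe to the user's collection.",
    parameters: {
      type: "object",
      properties: {
        name: { type: "string" },
        ingredients: { type: "string", description: "Markdown list of ingredients" },
        method: { type: "string", description: "Markdown numbered steps" },
        serves: { type: "number" },
      },
      required: ["name", "ingredients", "method", "serves"],
    },
  },
} as const;

// Simplified view of a tool call: the model supplies the function name
// and its arguments as a JSON string.
interface ToolCall {
  name: string;
  arguments: string;
}

// Apply a tool call and return the string result to send back to the model.
function handleToolCall(call: ToolCall, collection: Recipe[]): string {
  if (call.name !== addRecipeTool.function.name) {
    return JSON.stringify({ error: `Unknown function: ${call.name}` });
  }
  const recipe = JSON.parse(call.arguments) as Recipe;
  collection.push(recipe);
  return JSON.stringify({ ok: true, count: collection.length });
}
```

In the app the handler would write to Airtable and not to an array, but the round trip is the same: the model proposes arguments, your code does the work, and a short string result goes back into the conversation.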

I was planning on not adding an explicit assistant to the app, as I wanted to explore patterns beyond a chat app. The UI for the chat isn't trivial, due to the streaming nature of the responses and function calling. Happily, I found a nice example from OpenAI, which I was able to refactor with ChatGPT. I'm looking forward to rethinking how the Omni assistant is expressed in the app.

What’s next?

I’m open-sourcing the app! I’ve benefited hugely from open-source resources and technology; it feels really fulfilling to put something out there. I’d love to see your forks or contributions.

I really want to encourage you to play with these OpenAI APIs in this project or your own. Learning React 8 years ago helped me so much in my career and general fulfillment in my day job. I think these APIs will be even more impactful. I’m also thinking of how I can make the project more educational if you’re not a programmer.

Yesterday, OpenAI announced GPT-4o. I absolutely loved watching all the videos. I can’t wait to iterate the app further with the new video and audio APIs.