Tiny Talking Todos

Watch this introduction to Tiny Talking Todos (Talkies) - a voice-enabled task management tool for families.

My Role

Tiny Talking Todos, or Talkies, is a nights-and-weekends passion project where I serve as both designer and developer. What makes this project unique is that it's my first AI-assisted development experience, blending traditional design skills with emerging AI capabilities.

Background

The project was informed by my experiments building an AI meal planner, Omnivore, and by feedback from my AI workshops.

You can try Talkies with your own family here.

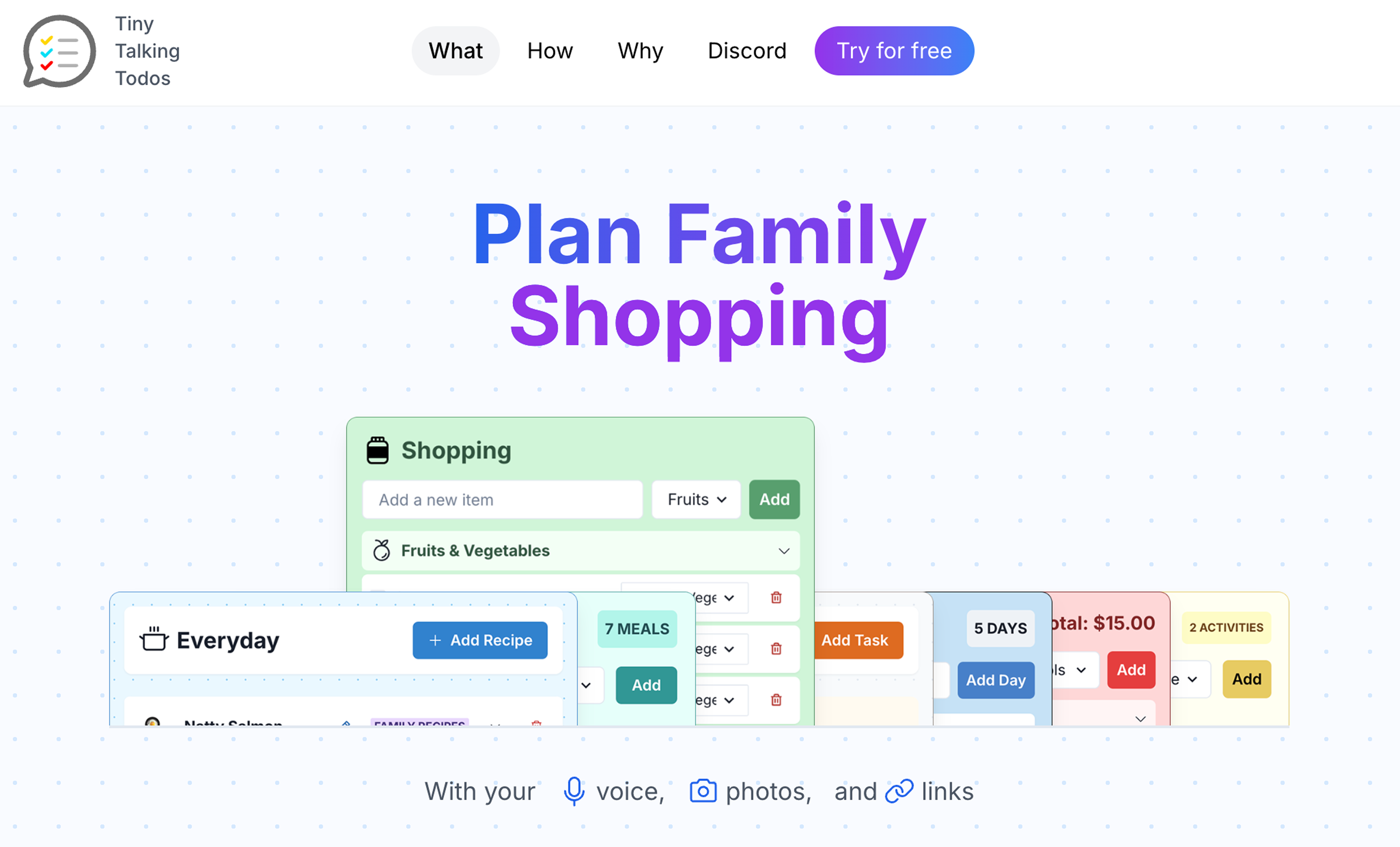

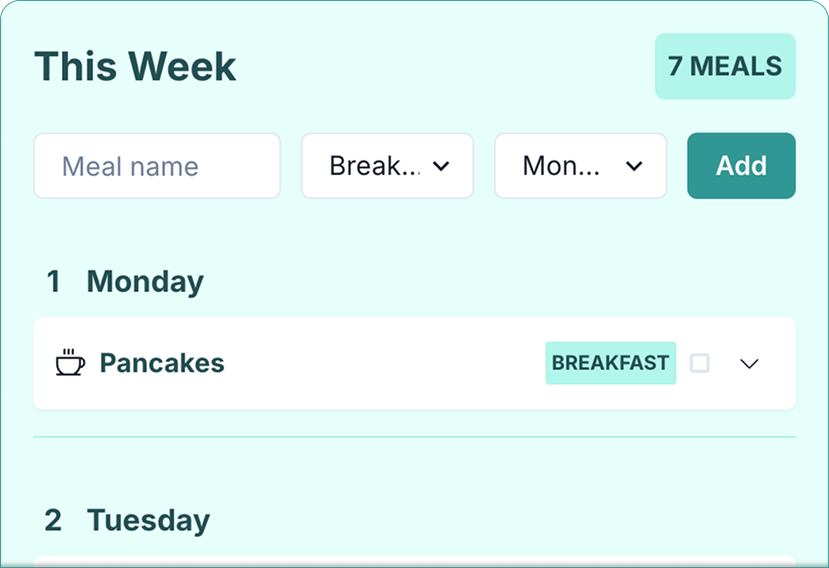

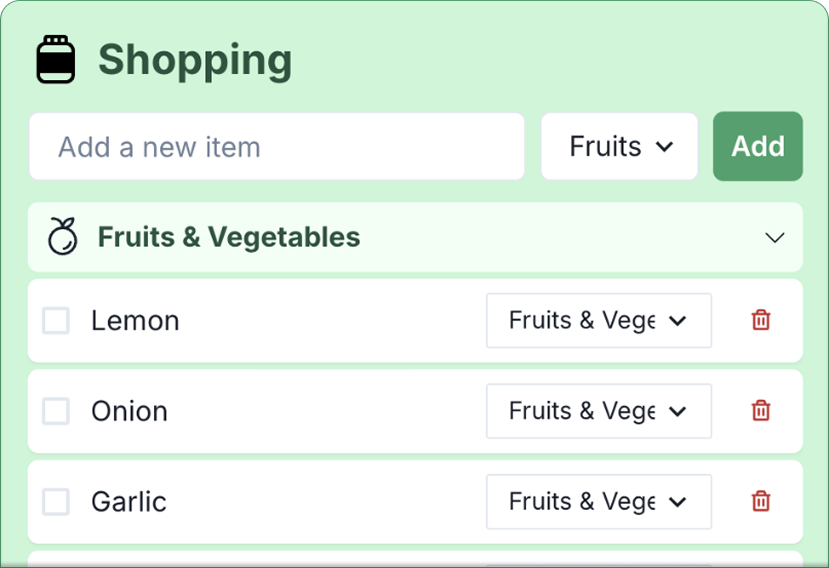

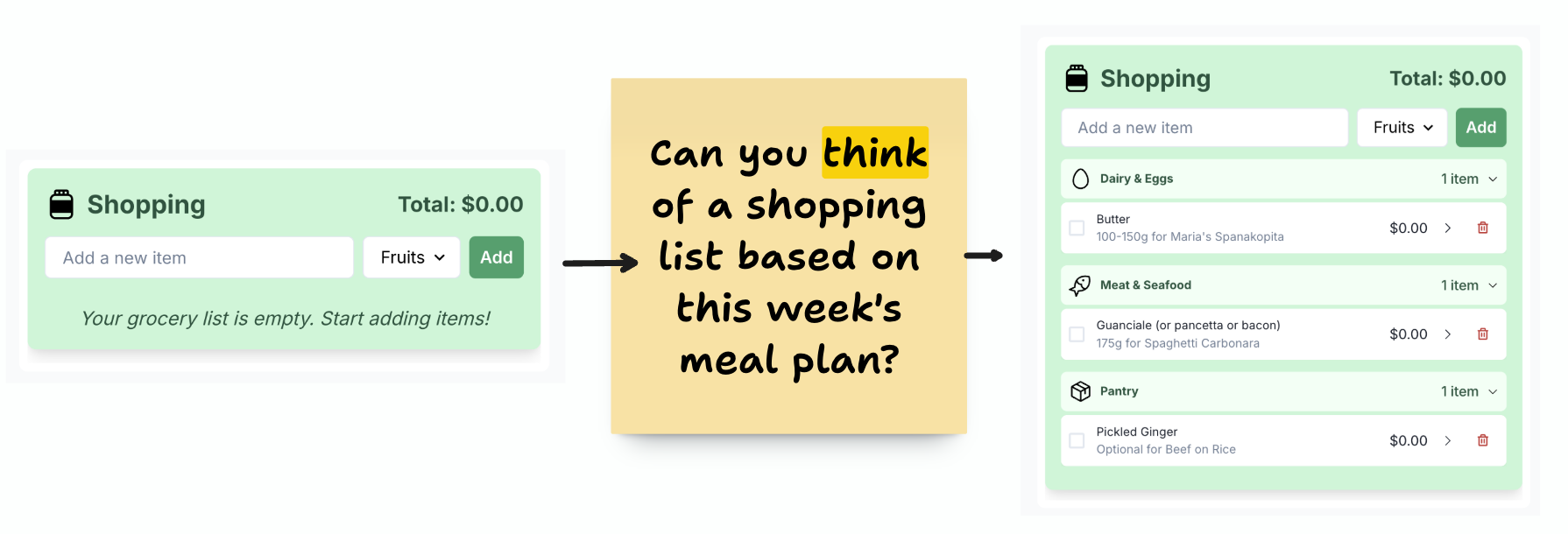

Compare lists to create context

Update your shopping list with your voice

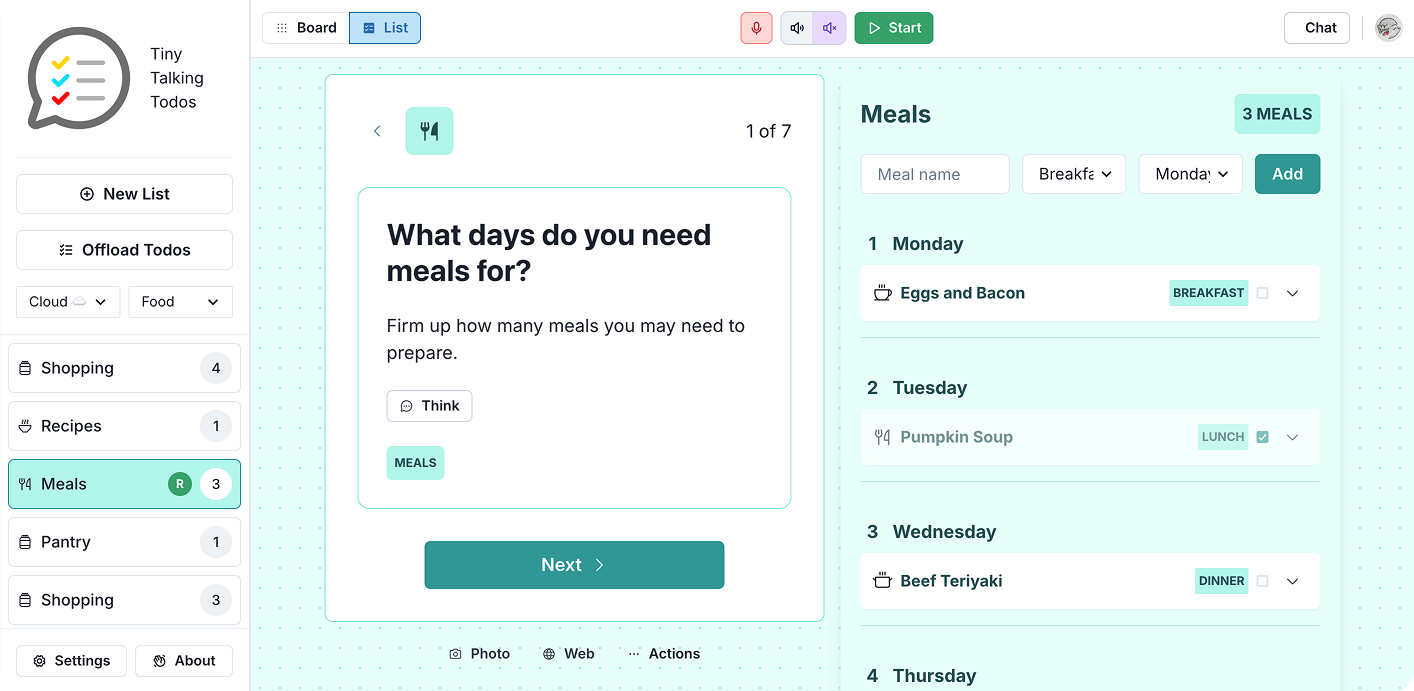

Guided meal planning

The Problem

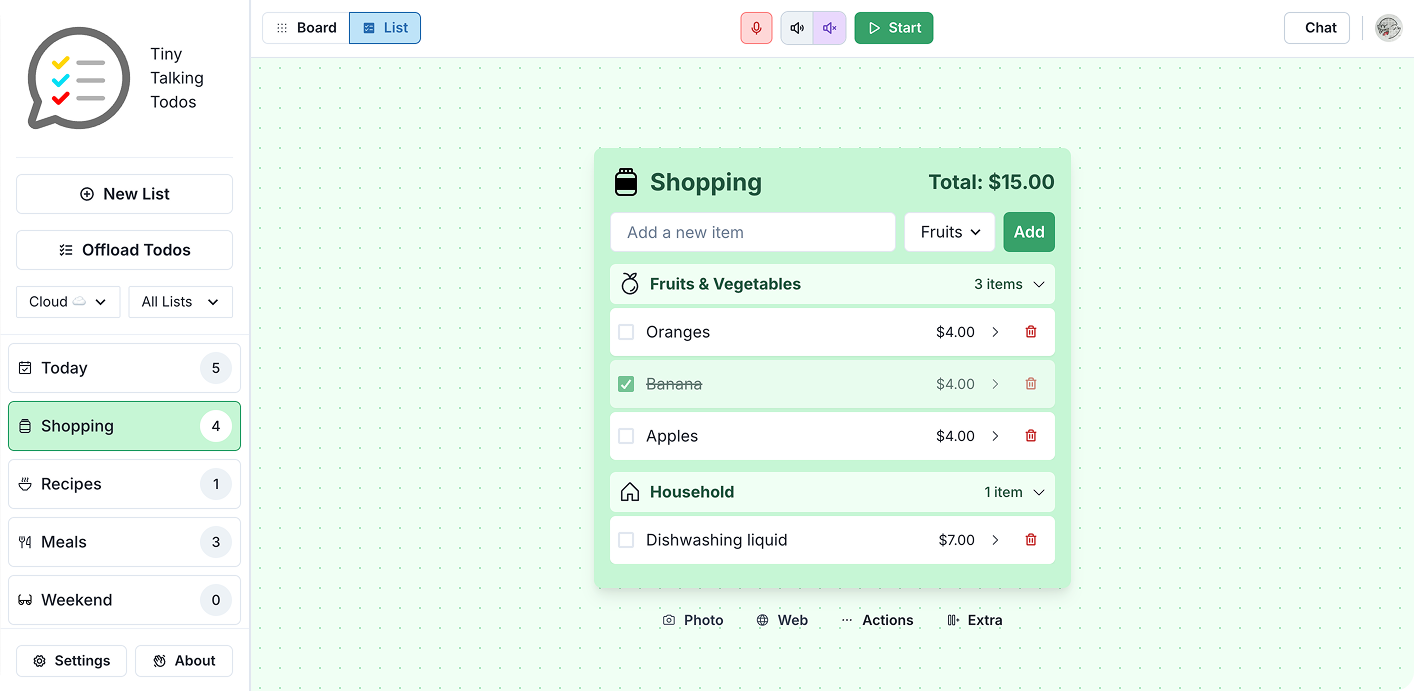

Many parents, particularly dads, struggle with managing the "mental load" of family life—the invisible work of meal planning, shopping lists, and scheduling. Talkies is an app designed to help visualize this mental load and empower families to share these responsibilities. With AI assistance and collaborative features, it specifically encourages dads to take a more active role in daily household management.

Have I watered that plant? I need to clean up the living room? Have we bought coffee beans? What's for dinner?

Design Process

Phase 1: Early Concept and Rapid Prototyping

In 2024 I ran a workshop where participants could talk to a shopping list using AI voice-to-text. Many participants asked how to create their own multimodal AI prototypes after the workshop, and I didn't have a good answer. This inspired me to try to build a workflow where you could design your own list, ready to work with multimodal AI services.

I had a working version of this in about a week. It was very crude, but I had the basic loop working where you could co-design a list with an assistant in a browser, and then talk to it to create and edit todos. The only problem was that it barely worked - the voice interaction was clunky, and the list code generation was bug-prone. And, of course, it was unclear what the utility of such an interaction was.

Early prototype (3AM in fact) of a working version of Talkies.

Phase 2: Technical Evolution and User Insights

My curiosity was not sated in this first month. I wanted to build beyond a functional prototype: I wanted something I could put in front of users to get some real feedback. That meant making some key technical decisions. I switched to TinyBase for better data management while keeping data local for privacy, and added "Tiny" to the title of the project as a name check. I also built a custom voice pipeline to replace the initial solution, fixing the speed and cost issues of early versions.

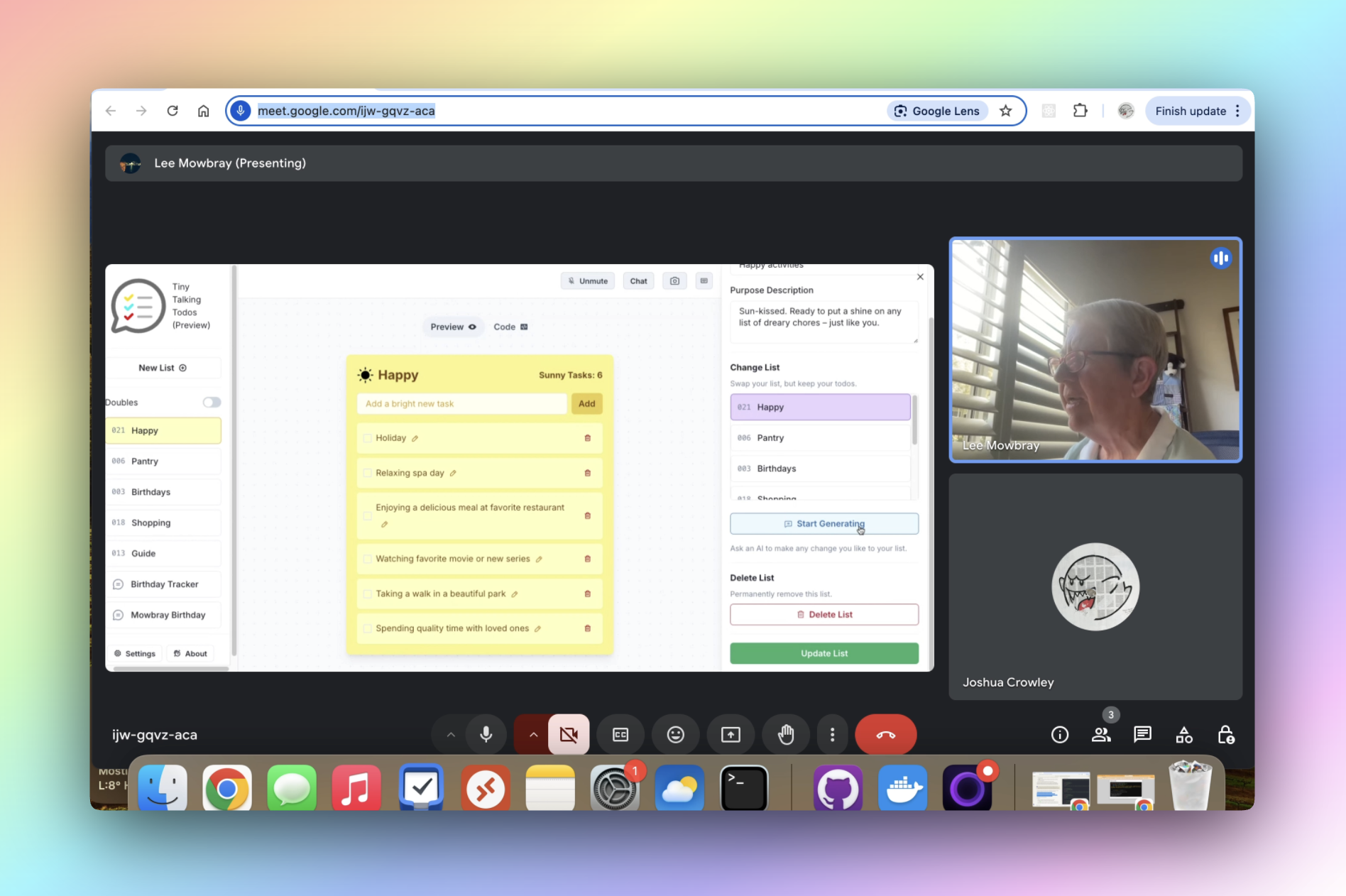

User testing with friends and family revealed that people preferred a voice agent that mainly listened rather than one that was "very talkative and chatty." This helped refine the interaction model away from novelty toward utility. The ability to get an AI assistant to help change the functionality of the lists was interesting to users; we had fun in user testing sessions identifying unique requirements and building custom lists to suit, like a lunch box planner. But it was bug-prone, as the AI models were still not strong enough to consistently meet a user's list requirements.

Early prototype demonstrating multimodal list interaction concepts

Phase 3: Feature Development and Technical Breakthroughs

About 6 months in, the project hit a milestone with Gemini 2.0 Flash in December 2024, which finally delivered the multimodal capabilities I needed. This model family had the right balance of speed, cost, and accuracy for voice-to-text, text-to-voice, and code generation for the lists. I felt like my patience had been rewarded, and that the models had caught up to the requirements I had stuck to from the start of the project.

However, the app still felt like a neat demo, and it wasn't solving a burning problem for the users I tested with. What could I do with this todo app?

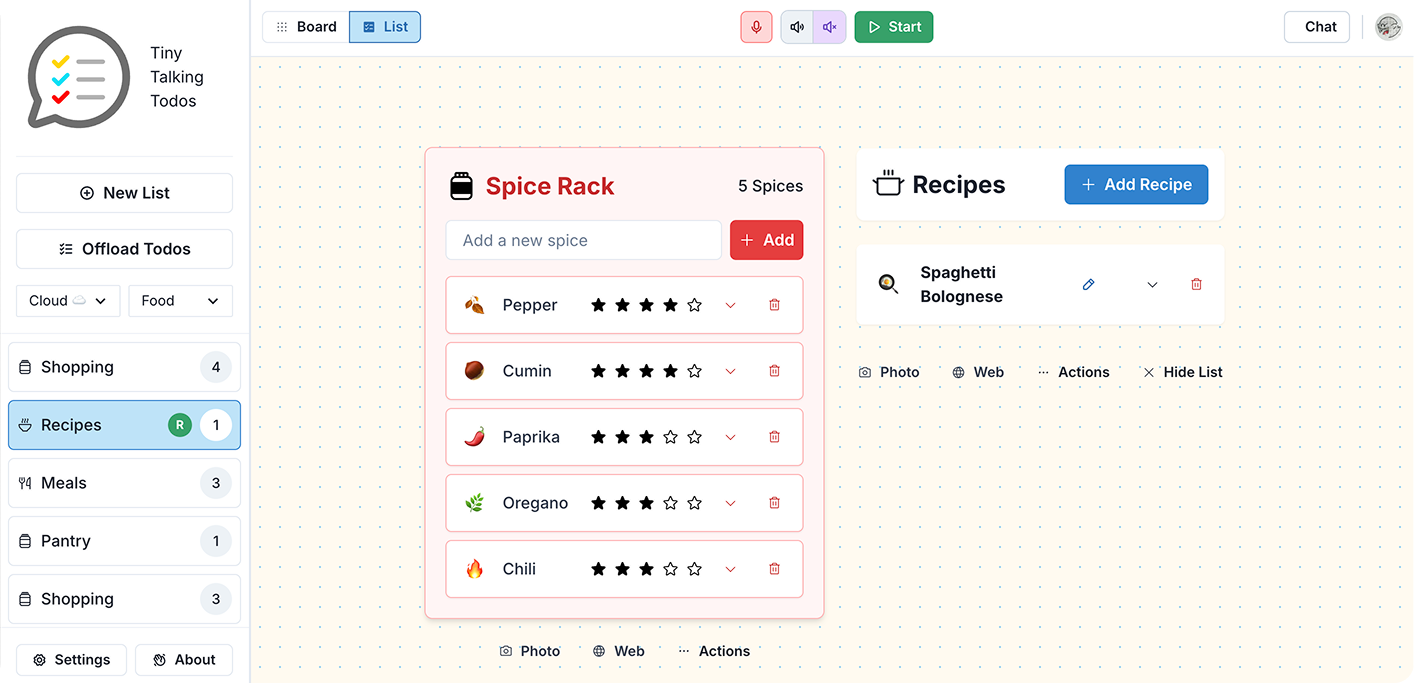

One emergent behavior of the app was that I could get multiple lists to talk to each other. I could ask a shopping list to update from a recipe list's todos and it would work pretty well. The behavior didn't scale perfectly, but it was a spark that stayed with me. This was possible because I had built a system for expressing the lists and their todos as context to the AI assistant. When more advanced reasoning models like OpenAI o1 and Google Gemini Flash Thinking became available, they found significant utility in the large context I could provide from my app. Now I felt like I could save families some time and headaches with my app.
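As a rough illustration of what "expressing the lists and their todos as context" could look like, here is a minimal TypeScript sketch. The `Todo` and `TodoList` shapes, the `buildContext` function, and the prompt wording are all assumptions made for this example; they are not the actual Talkies data model or prompt.

```typescript
// Hypothetical shapes for illustration; not the real Talkies data model.
interface Todo {
  text: string;
  done: boolean;
}

interface TodoList {
  name: string;
  purpose: string; // short description of what the list is for
  todos: Todo[];
}

// Render every list as plain text so a model can reason across all of them.
function buildContext(lists: TodoList[]): string {
  return lists
    .map((list) => {
      const items = list.todos
        .map((todo) => `- [${todo.done ? "x" : " "}] ${todo.text}`)
        .join("\n");
      return `## ${list.name}\nPurpose: ${list.purpose}\n${items}`;
    })
    .join("\n\n");
}

const lists: TodoList[] = [
  {
    name: "Recipes",
    purpose: "Meals planned for this week",
    todos: [{ text: "Pumpkin soup", done: false }],
  },
  {
    name: "Shopping",
    purpose: "Groceries to buy",
    todos: [{ text: "Coffee beans", done: true }],
  },
];

// The same context block can be prepended to any user request.
const context = buildContext(lists);
const promptText = `${context}\n\nRequest: update Shopping with ingredients for the Recipes list.`;
```

Keeping the context as plain text with a stated purpose per list is one simple way to let a model relate a recipe list to a shopping list without any list-specific code.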

However, I had one last hurdle: the app's UI focused on one list at a time. How can a user evaluate a suggestion that spans many lists? I turned to tldraw's implementation of a canvas editor, which was a perfect fit for allowing users to craft their own views of their lists.

The canvas feature allows users to organise lists visually, helping to distribute mental load. View a demo here

Learnings so far...

Malleable Software

A key idea with Talkies is its "malleable software" approach—creating systems users can personalise without programming knowledge. Inspired by the writings of Geoffrey Litt, the system lets users easily refine existing list templates or create entirely new list types based on their needs. The AI understands the intent behind these lists and updates them as family requirements change.

"It's really exciting to think of this concept of malleable software as emerging... having this flexible layer of manual software that we can invite users to personalise and make sure they feel empowered in this AI process."

This shows how AI can transform software development, allowing personalisation at a level previously impossible without technical expertise. It bridges the gap between developers and users, creating more adaptable, human-centred experiences.

A collection of custom list templates created by users, showing the flexibility of the system

The code generation workflow allows for easy customisation of list templates. View a demo here

AI-Assisted Development

I'm an early adopter of AI-assisted development. Throughout the 9-month process, I relied on AI to generate all the code for Talkies — predating "vibe coding" but embodying that approach completely. My process evolved from using ChatGPT to help write code I'd copy into VS Code, to Claude when Sonnet 3.5 was released, then a Claude Project, and finally Cursor with Claude 3.7.

I think I had the right profile to be an early adopter. I was always happy to be hands-off with the shape of the code, which I think is hard for a seasoned developer to embrace. I also have enough web dev experience to take over and help the assistant reason through problems. This includes knowing how to find logs and having some intuition about common problems with Next.js and React.

I also think I have good taste for open source software. Several early decisions proved crucial:

- Choosing Chakra UI, a design system well-known by AI models, which improved code quality and consistency

- Using TinyBase for local-first data management, simplifying what needed to be explained to the AI

- Implementing Clerk for authentication as an off-the-shelf solution easy to implement with AI help

- Using Cloudflare with its straightforward configuration for simple deployment

Ironically, the hardest part was implementing frontier lab SDKs from the very companies creating these AI models. These SDKs tend to be poorly documented, under-developed, and not well understood by the models themselves—highlighting current limitations in the AI development ecosystem.

My view is that most developers are still wary of AI-assisted code workflows. Writing working code is just one output of a development process, and there are lots of valid questions about how these workflows fit within the principles of engineering.

ChatGPT, Claude + artefacts, then Cursor.

Balance of Automation and Control

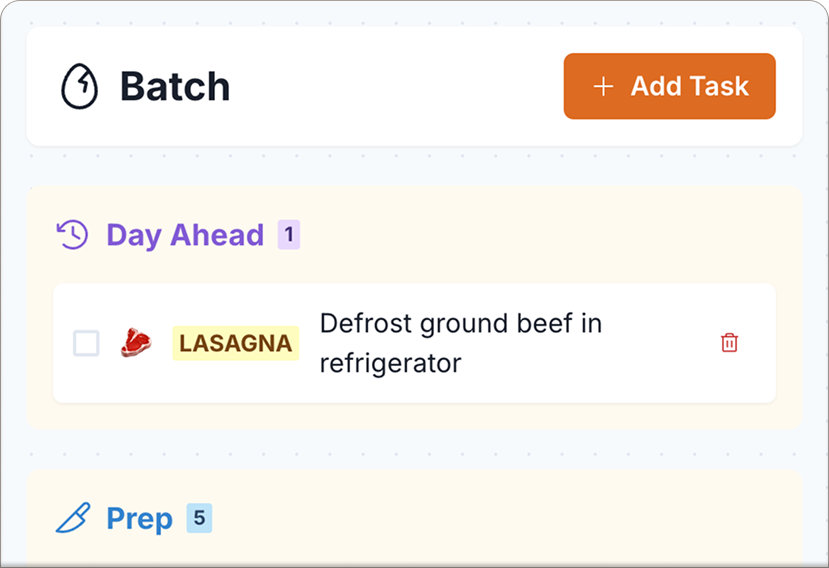

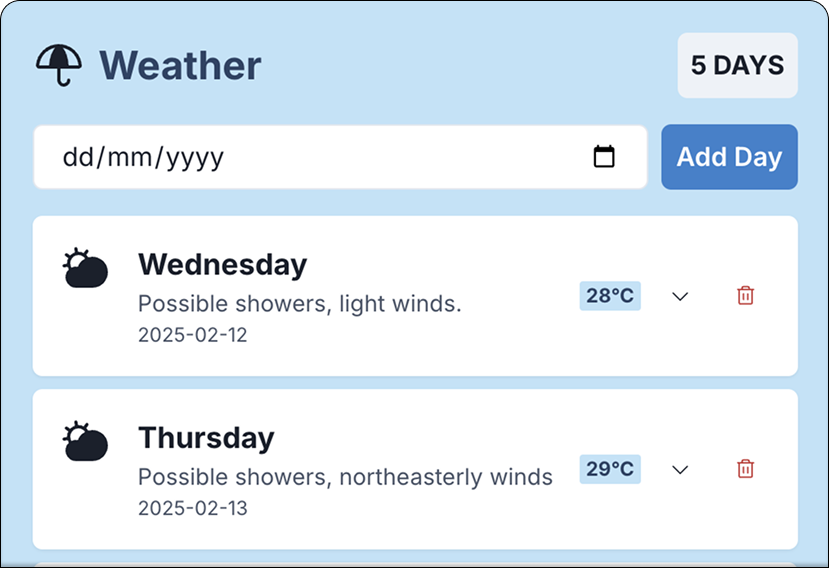

Not everything can be automated. Sometimes work is the point. A happy accident with Talkies is that the topic of mental load represents a body of labour, of which some portion can be automated to everyone's benefit, and another portion where the labour is the point. The application balances automation, control, and collaboration to empower users while respecting their agency.

The core principle is that AI should help users offload structured experiences and work through routines, but the output stays under human oversight. Generated lists can be placed on a shared board giving the family visibility and oversight. Users can modify tasks using voice commands, maintaining a natural interaction that keeps humans in the process.

The reasoning feature shows this philosophy in action. The system can take family context and generate multi-step plans. But rather than automatically executing these plans, it presents them for human review before handing them to the assistant AI for implementation. This mirrors how Cursor works, where a fast model implements code changes as instructed by a more sophisticated reasoning model—but always with human oversight.

'Think', the reasoning feature, can look over multiple lists at once and make sensible decisions.
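The review step described above can be sketched as a small gate between the plan and its execution. This is a minimal sketch under stated assumptions: `PlanStep`, `Lists`, and `applyApprovedSteps` are hypothetical names invented for this example, and the plan is hard-coded here rather than produced by a reasoning model.

```typescript
// Hypothetical types for illustration; not the real Talkies implementation.
interface PlanStep {
  list: string;
  action: "add" | "remove";
  todo: string;
}

type Lists = Record<string, string[]>;

// Apply only the steps the user approved; everything else is left untouched.
function applyApprovedSteps(lists: Lists, plan: PlanStep[], approved: number[]): Lists {
  // Copy the lists so the originals stay intact until the user accepts the result.
  const next: Lists = {};
  for (const listName of Object.keys(lists)) {
    next[listName] = lists[listName].slice();
  }

  plan.forEach((step, index) => {
    if (approved.indexOf(index) === -1) return; // rejected or not yet reviewed
    const todos = next[step.list] || [];
    if (step.action === "add") {
      // Avoid duplicating a todo that is already on the list.
      next[step.list] = todos.indexOf(step.todo) === -1 ? todos.concat(step.todo) : todos;
    } else {
      next[step.list] = todos.filter((t) => t !== step.todo);
    }
  });
  return next;
}

const before: Lists = { Shopping: ["Coffee beans"], Chores: ["Water the plant"] };

// In the app this plan would come from the reasoning model; here it is hard-coded.
const plan: PlanStep[] = [
  { list: "Shopping", action: "add", todo: "Pumpkin" },
  { list: "Chores", action: "remove", todo: "Water the plant" },
];

// The user approves only the first step, so the chore stays on its list.
const after = applyApprovedSteps(before, plan, [0]);
```

Representing the plan as data rather than executing it directly is what makes the human review possible: each step can be shown, accepted, or skipped before anything changes.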

Users, AI and Privacy

User testing drove Talkies' development, validating UX patterns and driving iteration. One advantage of a general-purpose application is that finding test participants is easy: almost everyone manages lists in some form.

The research focused on direct observation of users, particularly with voice interactions. For many participants, Talkies was their first experience with conversational AI beyond Siri or Alexa. Watching their initial excitement—followed by rapidly increasing expectations—showed how quickly users adapt to and then demand more from AI interfaces.

Voice interactions revealed both delight when interfaces work seamlessly and frustration when they fall short. This highlighted the gap between what users expect from AI (often influenced by science fiction) and what current technology can reliably deliver.

Privacy concerns emerged as a significant theme. Through participant conversations, I developed a nuanced understanding of users' comfort with data sharing and AI. I observed increasing anxiety around AI services over the nine-month testing period, culminating in one participant declining to use voice features entirely despite the local-first approach.

Photos of user testing sessions showing participants engaging with the app

Conclusion and Impact

Talkies is more than a task management app—it shows a new approach to software that empowers users through AI-enabled flexibility. By tackling the real-world problem of family mental load with multimodal, adaptable interfaces, the project shows how thoughtful design creates meaningful impact.

The journey from concept to product illustrates my approach: clear problem identification, rapid prototyping, extensive user testing, and continuous refinement. More importantly, it shows my ability to balance technical possibilities with genuine user needs—a critical skill for creating developer experiences that enhance productivity and satisfaction.

This case study demonstrates my capacity to lead end-to-end design for complex features while collaborating with technical teams to create solutions that are both innovative and practical—skills needed for making the most of the AI wave.

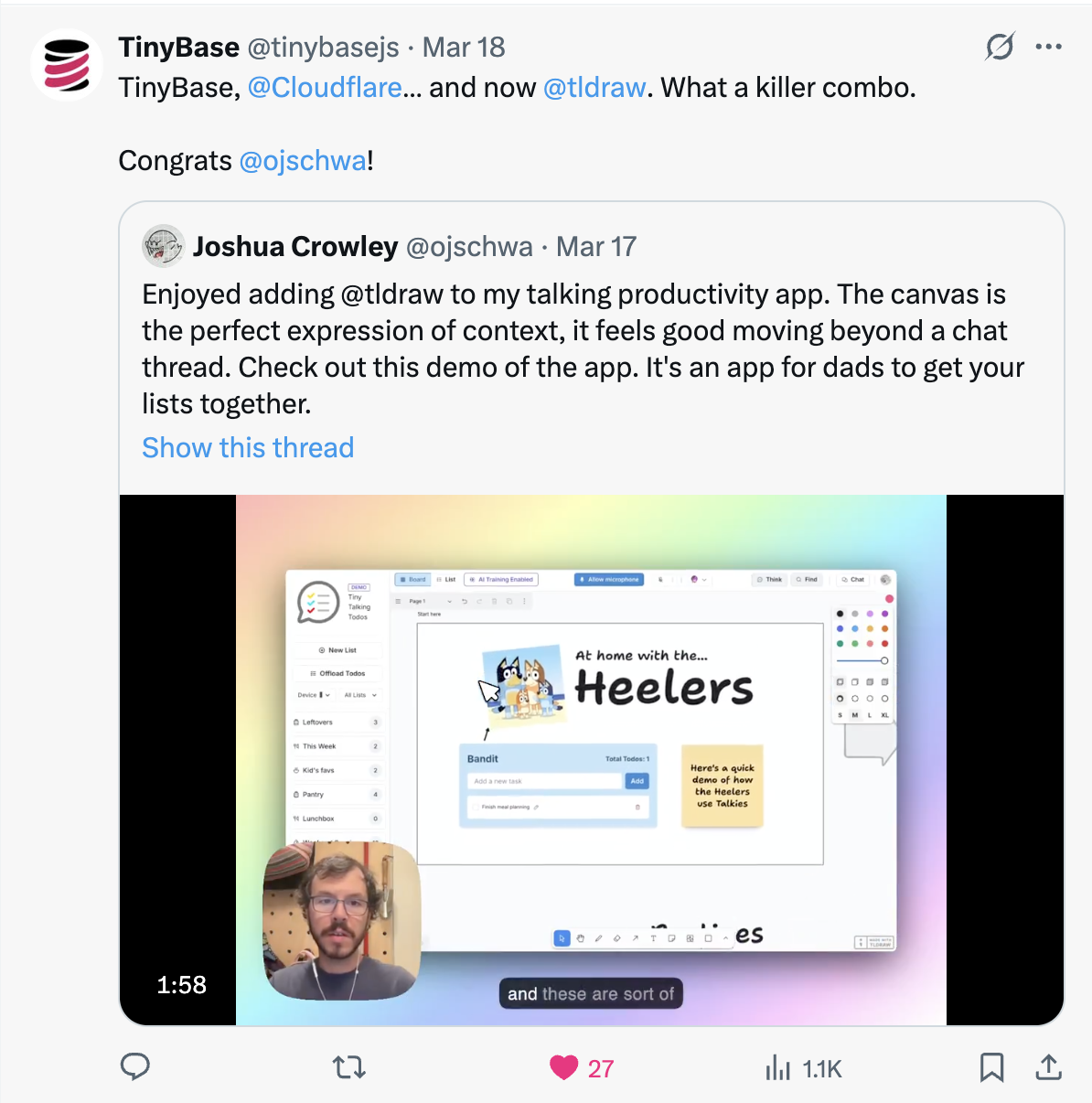

Twitter posts have been a great source of motivation. It's amazing how quickly ideas are travelling in this space.