Tiny Talking Todos

Introduction

Tiny Talking Todos (or Talkies) is a web-based AI project I'm building, available to try at tinytalkingtodos.com. Talkies is a personal knowledge base with a voice assistant, the "Operator." You can store and manage knowledge as lists and tasks, from simple to-do lists to complex, contextual tools like temperature trackers or meal planners. The Operator lets you query and perform multi-step transformations without a complex UI. There's a growing template library, plus the option to create new templates with Claude 3.5 Sonnet.

Motivation

Earlier this year, I built a series of AI prototypes for the kitchen called Omnivore. I took these prototypes on the road and showcased them to fellow designers at Design Outlook, in a workshop on multi-modal AI. After my session, the designers wanted to know: how could they prototype these experiences without diving into code?

I really wasn't sure. I had open-sourced the workshop material, so you could tweak my prototypes, but for most designers this is still a non-starter. It is possible to use tools like OpenAI's Playground to test out APIs and see what kind of responses or chats you could have. But you can't bring your own UI, nor persist the responses from the user. I got the sense the designers were a little disappointed with my answer.

Little did I know, I would soon have the answer in hand, literally. Traveling back from the airport, I read on my iPhone that Anthropic had just announced its new model, Claude 3.5 Sonnet, along with the "Artifacts" feature, a groundbreaking UX pattern that lets LLMs and users collaborate on code.

A very early prototype

My goal then became: how can I enable someone to prompt their own prototype, an artifact that could plug and play into a multi-modal interface, without needing to code? I gave myself the constraint of focusing on a todo list app, in which the user would be able to create variations on the todo list.

Pretty quickly, I had a rough-as-guts version of "Talking Todos". Here's a 3 AM explanation from a barely functional me and my prototype.

"Talking Todos" proved to me that this was a feasible idea with lots of directions to go in. It was still a very delicate idea, however. It took many prompts to make a prototype, and too much domain knowledge. The rough UX made it challenging to get into people's hands. It was expensive to run, and my non-coders would still need to deploy it somewhere to get it up and running. So my Talkies todo list started to grow, and I got to work on the next version.

TinyBase

A good decision I made when refactoring the app was to double down on TinyBase, which previously managed only a small amount of user state. It's an exemplar project for building with a local-first approach. If you follow the local-first ideology, you get a very fast, very private and easy-to-host app, which ticks a lot of boxes for me. So I did, and soon Talkies was a client-side app that stores your data in localStorage on your device. The TinyBase project is extremely well documented, and all the examples double as unit tests for the project. I've copied and pasted select files into text references for my LLMs, and it greatly improves the quality of the generated code.

Shipping

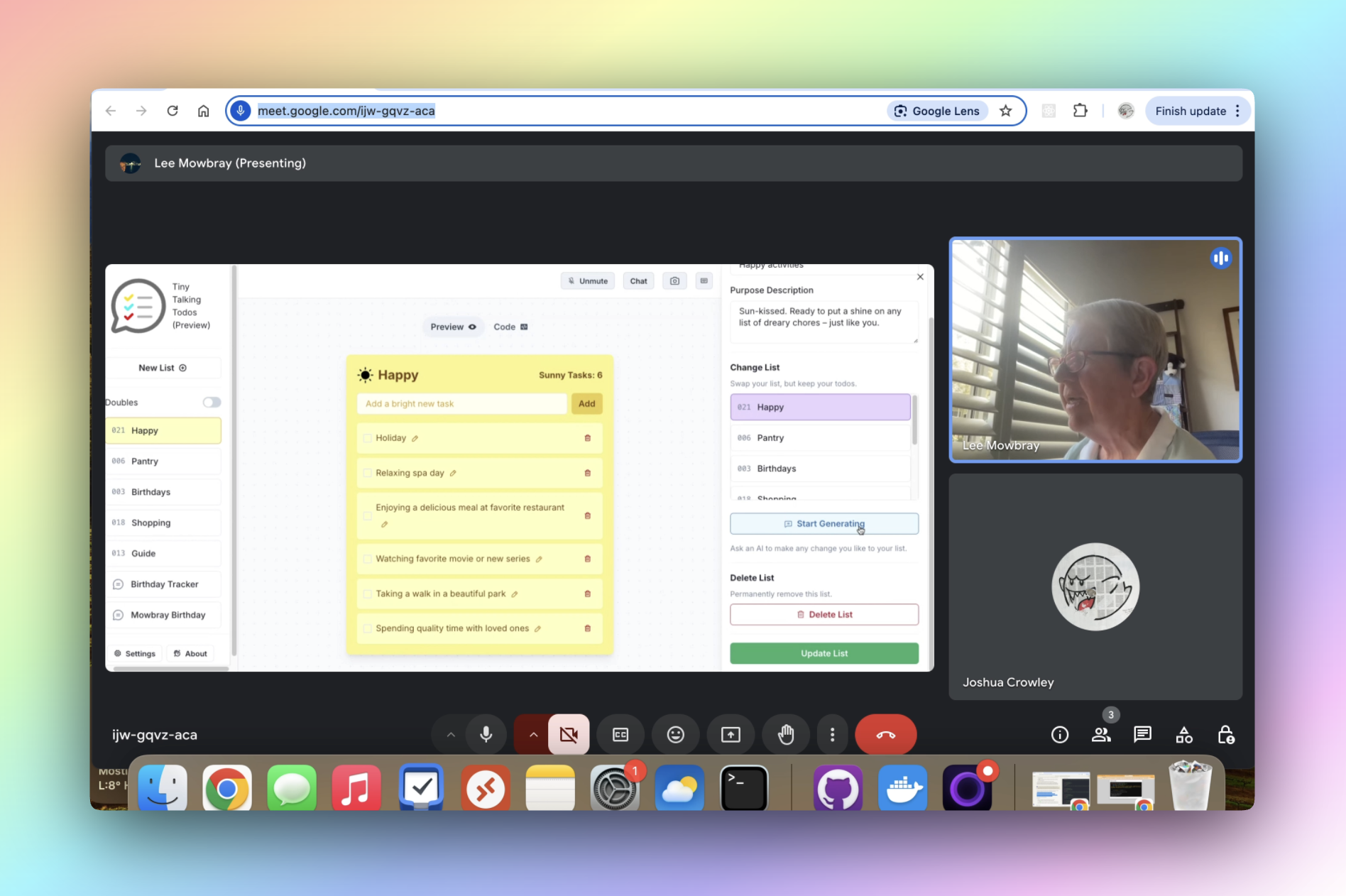

So after a couple of months of late-night coding a few times a week, I shipped a preview version of Tiny Talking Todos. It is limited in scope: there are no logins or accounts, no syncing, and you need to bring your own API keys to use the app. But what I had was a version of the app with an improved UX that I could test with users to begin validating its value proposition. Here are some of the key features of the app:

Catalogue

There's a catalogue of list templates, which users can add to their collection. As I experimented with codegen, it became clear that I needed to provide many relevant code examples to help steer the output of the model. The Catalogue also helps educate users about what they can tweak or do with these lists, which turn out to be more than just todo lists.

Lists can vary quite a bit in functionality, but they all work the same under the hood and are interchangeable, so the same collection of todos can be used with different lists. Each list provides different instructions to the Operator, which the user can configure. These instructions are quite powerful and can affect the Operator's behavior dramatically.

Vision

Using GPT-4o's vision capabilities, I can generate one or more todos from a user's photo, completely contextual to the list. The photo could be a recipe page, a list of written todos, a bookshelf with book titles, or a screenshot of your calendar. Usually with vision, you need a careful prompt and schema, and that can add some complexity to generating output. For Talkies, because we can provide the current list items as examples in the prompt, the chance of a well-aligned response increases. We also use structured outputs to ensure that the responses from the photo always match the schema of our store.
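To make the idea concrete, here's a minimal sketch of how existing list items and a schema might be packaged for a vision request, plus a defensive check on the reply. This is not the Talkies code: the `Todo` shape, the schema fields and the function names `buildVisionRequest` and `parseTodos` are all assumptions for illustration, and the actual model call is left out.

```typescript
// Hypothetical todo shape; the real Talkies store schema is not shown in this post.
interface Todo {
  text: string;
  done: boolean;
}

// A JSON Schema for the expected reply, in the general style that
// structured-output features accept. Field names are assumptions.
const todoListSchema = {
  type: "object",
  properties: {
    todos: {
      type: "array",
      items: {
        type: "object",
        properties: {
          text: { type: "string" },
          done: { type: "boolean" },
        },
        required: ["text", "done"],
        additionalProperties: false,
      },
    },
  },
  required: ["todos"],
  additionalProperties: false,
} as const;

// Build the text prompt, using the current list items as examples,
// and pair it with the schema the reply should follow.
function buildVisionRequest(listName: string, existing: Todo[]) {
  const examples = existing.map((t) => `- ${JSON.stringify(t)}`).join("\n");
  const prompt = [
    `Extract new todos for the list "${listName}" from the attached photo.`,
    "Match the style of the existing items:",
    examples || "(the list is currently empty)",
  ].join("\n");
  return { prompt, schema: todoListSchema };
}

// Check the reply at runtime before anything is written to the store.
function parseTodos(raw: string): Todo[] {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null) {
    throw new Error("Response is not an object");
  }
  const todos = (data as { todos?: unknown }).todos;
  if (!Array.isArray(todos)) {
    throw new Error("Response is missing a todos array");
  }
  return todos.map((t) => {
    if (typeof t?.text !== "string" || typeof t?.done !== "boolean") {
      throw new Error("Todo does not match the schema");
    }
    return { text: t.text, done: t.done };
  });
}
```

Even with structured outputs enforcing the schema on the model side, a small runtime check like `parseTodos` is a cheap safety net before data reaches the store.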

Custom lists

The genesis of Talkies was that you can build your own prototype via an Artifact-like pattern and experiment with voice interactions for different contexts. As each list is just a React component, we can use Claude Sonnet 3.5 to rewrite the component with new requirements, and we can do so pretty quickly and cheaply thanks to prompt caching with Claude. The system prompt for this is under constant iteration and relies on content from the Catalogue. I'd say a key to the success has been TinyBase's excellent documentation, as I rely on Claude to rewrite the functionality to read and write data from the store as required.

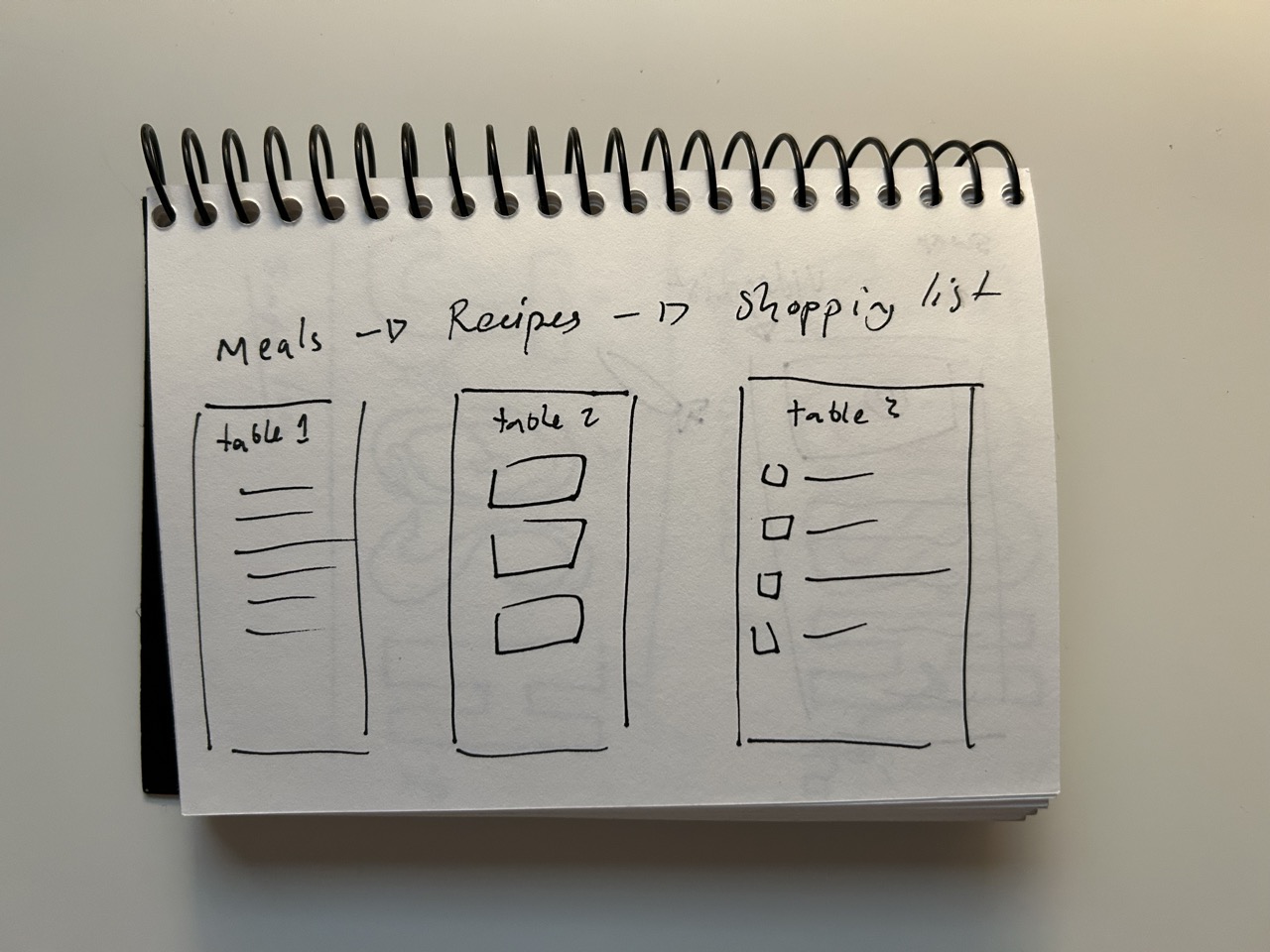

Transforming todos

I love this last feature, as it surprised me and continues to impress. Frontier models like GPT-4o and Claude Sonnet 3.5 can reason very well over data you provide in context. By simply using your voice, you can ask the Operator to perform complex operations on one or more lists. For example, you can quickly move recipe ingredients into a shopping list or turn a list of received gifts into thank-you notes. It's crucial to have an easy way to undo these operations. TinyBase offers undo/redo functionality out of the box, so you can safely roll back one or more operations.
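Here's a small sketch of why undo matters for these multi-list operations. It does not use TinyBase's own undo/redo API; it's a plain snapshot-based stand-in, and the `UndoableState` class and the `recipe` and `shopping` list names are hypothetical. The point is that a whole Operator action, however many lists it touches, is recorded as one step that can be rolled back.

```typescript
// Snapshot-based undo/redo, written from scratch for illustration only.
class UndoableState<T> {
  private current: T;
  private past: T[] = [];
  private future: T[] = [];

  constructor(initial: T) {
    this.current = initial;
  }

  get state(): T {
    return this.current;
  }

  // Run a multi-step operation against a copy, then commit it as one undoable unit.
  apply(operation: (draft: T) => void): void {
    const draft = JSON.parse(JSON.stringify(this.current)) as T;
    operation(draft);
    this.past.push(this.current);
    this.current = draft;
    this.future = []; // a new action clears the redo history
  }

  undo(): boolean {
    const previous = this.past.pop();
    if (previous === undefined) return false;
    this.future.push(this.current);
    this.current = previous;
    return true;
  }

  redo(): boolean {
    const next = this.future.pop();
    if (next === undefined) return false;
    this.past.push(this.current);
    this.current = next;
    return true;
  }
}

type Item = { text: string; done: boolean };

const lists = new UndoableState<{ recipe: Item[]; shopping: Item[] }>({
  recipe: [
    { text: "Flour", done: false },
    { text: "Eggs", done: false },
  ],
  shopping: [{ text: "Milk", done: false }],
});

// "Move my recipe ingredients into the shopping list" as a single operation.
lists.apply((draft) => {
  draft.shopping.push(...draft.recipe);
  draft.recipe = [];
});
```

After this, one call to `lists.undo()` puts both lists back as they were, which is the safety net you want when a voice command rewrites several lists at once.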

Reflection

It's worth pointing out that my goal has shifted over this period. My initial goal, a simple way to build a multi-modal prototype, has shipped, but it still needs lots of work and supporting code examples to be reliable. I also need user input to discover what changes they'd like to see.

What I didn't realise was that by building a system that handles many lists with shared access to data, I was creating a handy knowledge base that scales beyond one list, to many lists and many use cases. Furthermore, the Operator was proving very useful for quickly creating, updating and finding data in these separate lists, which would otherwise be a big UX challenge.

Diary entry – 17th of June

Talking Todos could be a great standalone product?

- Generate 100+ todo templates, mix of hand crafted, generic, weird ones.

- Show how you can customise them, mix them.

- You can talk to the app to find which one you want, get advice.

- Talk to the app to use the lists.

Q: Why would you have heaps of todo lists? A: Different spaces and contexts. The idea is that the todo code itself is a great conduit for setting context for the voice assistant. Because the voice assistant can help you discover and interact with the UI naturally, you can have varied interfaces.

User Testing

As exciting as all the components of Tiny Talking Todos are to me, how do I take this project and make it useful as an actual app for you? Well, of course, as a UX designer, there's nothing more grounding than a user testing session, so I organized 4 different sessions with users to unpack the app. Here are the main discoveries we had together:

UI as a Contract

All the testers were pretty confident about how to engage the app with their voice from the get-go. Well, that is once they had figured out how to enable their microphone and start talking to the AI. There was quite a bit of design iteration on that UI, and there is more to do.

As with the Artifact pattern, having a "Thing" to talk about, one that creates a shared understanding with the LLM, is really helpful. Skeuomorphism, eat your heart out. What remains a challenge is quickly ramping users up to giving complex multi-step operations to the Operator. I built a tutorial to help prompt users into being more greedy with their own prompts, and it was fun to see their expectations increase. This helps express the main value proposition of the app: if you invest time in bringing your data in, you'll save time in the future by having an Operator help extend and modify it.

Presently, I send a copy of the UI's code to the assistant to help it understand the affordances of the list that the user is talking about. For example, one of the lists has a constraint where you cannot have more than 3 items, and the UI code reflects that by disabling an input when the list count is 3. In my user testing so far, this goes a long way toward steering the assistant as well: it will refuse to add extra items to the list, despite there being no other mechanism to block that condition.
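As a rough sketch of what "sending the UI's code to the assistant" could look like, the snippet below folds a component's source into the Operator's prompt. Everything here is hypothetical: the `TopThreeList` component, the `buildOperatorPrompt` helper and the prompt wording are stand-ins, not the actual Talkies prompt.

```typescript
// Hypothetical source for a list component that caps the list at three items.
const listComponentSource = `
function TopThreeList({ items, onAdd }) {
  const isFull = items.length >= 3;
  return <input disabled={isFull} placeholder="Add item" onKeyDown={onAdd} />;
}`;

// Assemble a system prompt that shows the assistant the UI it is narrating.
function buildOperatorPrompt(
  listName: string,
  componentSource: string,
  listInstructions: string
): string {
  return [
    "You are the Operator for a todo app.",
    `The user is talking about the list "${listName}".`,
    "The list is rendered by the React component below.",
    "Treat any limits enforced by this UI as rules you must also follow.",
    "--- component source ---",
    componentSource.trim(),
    "--- end component source ---",
    `List instructions: ${listInstructions}`,
  ].join("\n");
}

const operatorPrompt = buildOperatorPrompt(
  "Top Three",
  listComponentSource,
  "Keep replies short."
);
```

Because the `items.length >= 3` check is visible in the prompt, the model has a concrete reason to decline a fourth item. Note that this is steering rather than enforcement, so a real guard in the store would still be the safer long-term mechanism.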

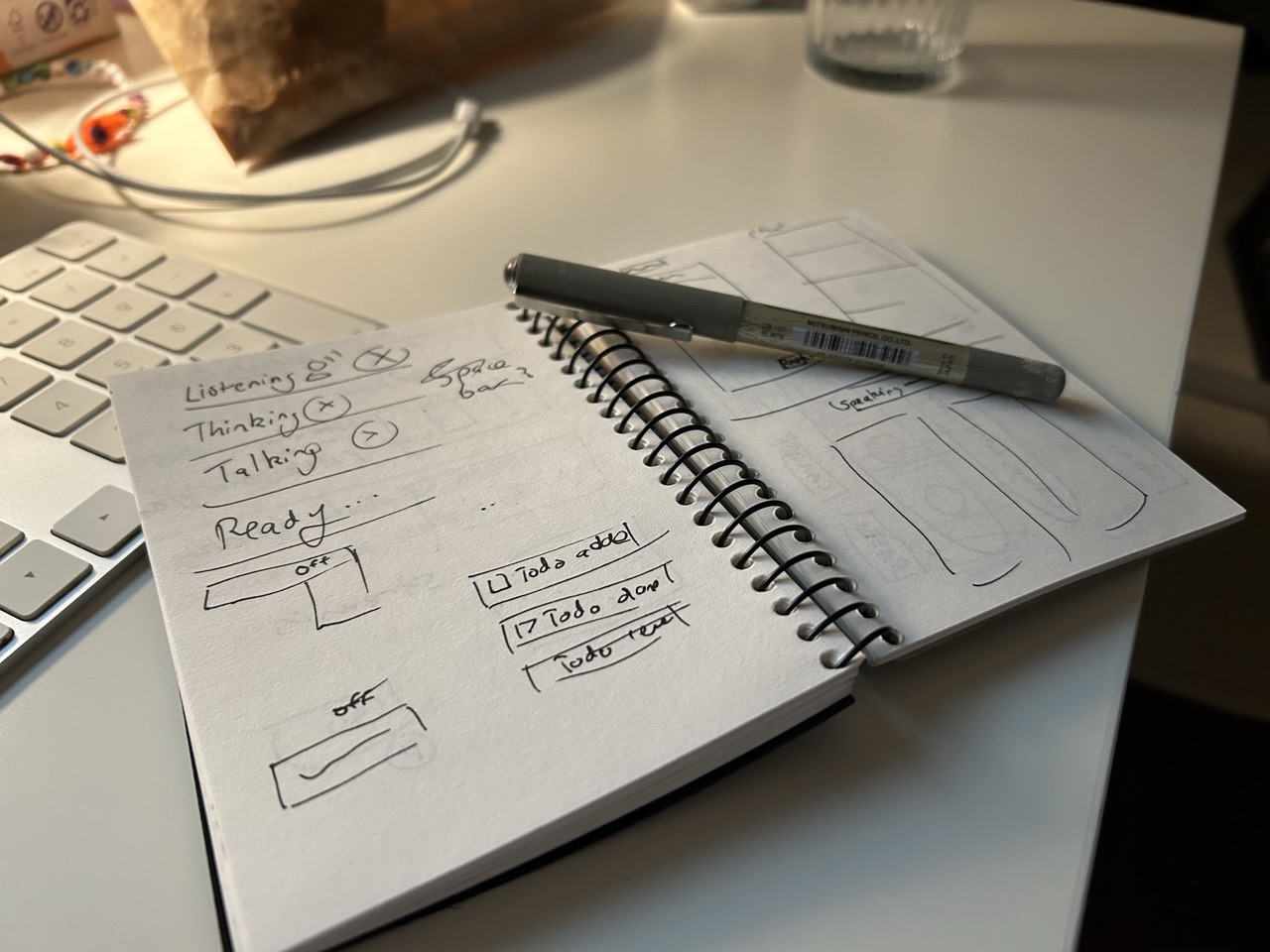

Finding the Right Amount of Voice

If you pipe an LLM output straight into voice, it's just too chatty for most tasks and also prohibitively pricey as of September 2024 for personal use. There is a dream of a human-like conversation with AI that feels fluid and fun, as expressed in the demos of services like GPT-4o. After my experimentation, I'm a little skeptical. I think we mostly want to be in conversation with the UI/screen and not a bubbly assistant. But of course, it will be powerful to be able to just talk and use the app, without a screen as well.

In Talkies, my approach is that users have an "Operator". Operators are not conversationalists; instead, they narrate the UI and your interactions with the underlying services. To achieve this, I prompt the LLM to always include a speak tag, for example <speak>Apple Added</speak>, which triggers if the record has been created in TinyBase. How Operators are expressed in Talkies still needs more thought.
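A minimal sketch of the speak-tag idea follows. The function names `extractSpeech` and `narrate`, and the `say` callback, are assumptions for illustration; the post doesn't show how Talkies actually parses the tag or produces audio.

```typescript
// Pull the contents of every <speak>…</speak> tag out of the model's reply.
function extractSpeech(llmOutput: string): string[] {
  const pattern = /<speak>([\s\S]*?)<\/speak>/g;
  const phrases: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(llmOutput)) !== null) {
    const text = (match[1] ?? "").trim();
    if (text.length > 0) phrases.push(text);
  }
  return phrases;
}

// Only narrate once the store write has succeeded, so the Operator
// never announces something that did not actually happen.
function narrate(
  llmOutput: string,
  recordCreated: boolean,
  say: (text: string) => void
): number {
  if (!recordCreated) return 0;
  const phrases = extractSpeech(llmOutput);
  for (const phrase of phrases) {
    say(phrase);
  }
  return phrases.length;
}
```

Keeping the spoken text inside a tag means the rest of the model's reply can stay silent, which is one way to keep the Operator terse.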

We had a lot of fun in the user testing sessions chuckling at the Operator's responses. It became very clear with each participant that the right amount of voice is quite personal, and that more control is needed, including the option to turn off the Operator's voice altogether.

Generate Your Own List

I've iterated on how I explain this concept to users a few times, as the idea that the user interface is malleable at all is such a foreign one. In our sessions, users needed quite a bit of guidance on how to prompt it, often lacking the terms or frame of reference for tweaking a UI that a designer or developer would have. Common tweaks are simply the name of the list or the color, and I've worked on making these configurable via voice or UI without needing to regenerate the list. Again, this is an area I'm keen to iterate on, and in some ways, I've taken the pressure off this concept with a catalogue of pre-made lists, which users can use and may eventually tweak without much fuss. Meanwhile, this feature has been a boon for me, as I'm able to quickly make new lists for the catalogue.

My sessions with users have been the highlight of the project so far. There's a high bar for anything as general-purpose and consumer-facing as Talkies thinks it might be. My experiences with users so far, whether their reactions to the Operator speaking clearly for the first time or their being impressed as they attempt to confuse the Operator with tricky commands, are a constant source of energy.

Closing Thoughts

While I'm very proud of my progress, my closing thoughts are on my process. Throughout the project, I've kept both a pocket sketchbook and a diary of notes on my iPhone, always on hand. These are habits I've had in the past, but they've never felt as necessary as they do now. There are just so many ideas floating around: new models, new techniques, new thinking. I feel like a blacksmith working urgently on a smoldering idea, smashing out the impurities while it's still hot and burning, before plunging it into icy water to see if it shatters or makes Talkies stronger.

I never sit down in front of the computer now without a plan for what to work on and a clear goal for the session. With past side projects, it was easy to bumble around the codebase, endlessly optimizing or burning out on a bug. I've used Cursor and Anthropic's Sonnet 3.5 throughout this project as a coding assistant, and it feels absolutely electric to build features so quickly and to refactor code so effectively. I can explore stuff I have no experience with, like the Audio context feature I discussed, where I could focus on the idea and not the implementation details.

For me, where I'm at as a hybrid programmer/designer, this process really ticks all my boxes. Whatever happens next, I'm thankful to have a chance to learn and progress my craft within this moment, with all the new affordances I get. Talkies will be successful if I can do that for others.